Introduction

Over the last few years, when I have written about the Post Office Scandal, I have concentrated on the failings of the Post Office and Fujitsu to manage software development and the IT service responsibly, and the abject failure of the Post Office’s corporate governance. I have made only passing mention of the role of the firm that served as external auditors for almost the full duration of the scandal. This has been for two linked reasons.

Firstly, the prime culprits are the Post Office and Fujitsu; they have not yet been held accountable in any meaningful sense. The legal and political establishment are also partly responsible, and there has been little public scrutiny of their failings. It is right that campaigners and writers keep the focus on them.

Secondly, casting the external auditors as secondary villains is largely because of their role, and certainly not because of exemplary conduct. Their duty was to assess the truth and fairness of the Post Office’s corporate accounts. Should they have uncovered the problems with Horizon, or at least raised sufficient concern to ensure that these problems would be investigated? I believe the answer is yes, they probably failed. However, the failure is largely a result of the flawed and dated business model for external audit – certainly at the level of large, complex corporations. Many smaller audit firms do a good job. The Big 4 audit firms, i.e. EY, PricewaterhouseCoopers (PwC), KPMG and Deloitte, do not. I had no great wish to dive into the complex swamp of the external audit industry. That time has now come!

Statistical nonsense and the purpose of Horizon

Ernst & Young (EY) are one of the Big 4. They audited the Royal Mail from 1986, throughout the development and implementation of Horizon, and through most of the cover-up. EY continued to audit the Post Office after it split off from Royal Mail in 2012. In 2018 they were finally replaced by PwC, one of their Big 4 rivals.

Even if EY did fail I doubt if any of the Big 4 would have done any better. In this piece I will touch only on those factors relevant to the Post Office Scandal.

I was prompted to address the issue of external audit by an exchange on Twitter. Tim McCormack posted a screenshot of a quote by Warwick Tatford, the prosecuting barrister in Seema Misra’s 2010 trial. He offered this argument in his closing statement [PDF, opens in new tab].

“I conceded in my opening speech to you that no computer system is infallible. There are computer glitches with any system. Of course there are. But Horizon is clearly a robust system, used at the time we are concerned with in 14,000 post offices. Mr Bayfield (a Post Office manager) talked about 14 million transactions a day. It has got to work, has it not, otherwise the whole Post Office would fall apart? So there may be glitches. There may be serious glitches. That is perfectly possible as a theoretical possibility, but as a whole the system works as has been shown in practice.”

I tweeted about this argument, calling it a confused mess. It should have been torn apart by a well briefed defence counsel.

Warwick Tatford was guilty of a version of the Prosecutor’s Fallacy, and it is appalling that it took more than 10 years for the legal establishment to realise that Seema Misra’s prosecution was unsafe.

The odds against winning the UK National Lottery jackpot are about 45 million to 1. Tatford’s logic would mean that if someone claims that the £10 million they’ve suddenly acquired came from a lottery win they are obviously lying, aren’t they? Obviously not. The probability of a particular individual being lucky might be extremely low, but the probability of a winner emerging in a huge population approaches certainty.

The prosecutor’s reasoning was like claiming that, because 10 successive coin tosses producing 10 heads is extremely unlikely (a probability of 1 in 1,024), we can dismiss the possibility that it will happen if we get 1,000 people to perform the 10 tosses. On that logic, if a participant does report a run of 10 heads that is, per se, proof that they are a dishonest witness. The odds would actually be almost 2 to 1 on (a probability of 62.4%) that it would happen to someone in that group. Even if Horizon performed reliably at most branches that does not mean courts can dismiss the possibility that there were serious errors at some branches.
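The coin toss figure is easy to check. The following is a minimal sketch in Python, assuming fair coins and participants who toss independently of each other; the function name is mine, purely for illustration.

```python
def prob_at_least_one_run(people: int, tosses: int) -> float:
    """Probability that at least one of `people` independent participants
    gets heads on every one of `tosses` fair coin tosses."""
    p_single = 0.5 ** tosses              # one person: 1 in 1,024 for 10 tosses
    p_nobody = (1 - p_single) ** people   # nobody in the whole group manages it
    return 1 - p_nobody


p = prob_at_least_one_run(people=1000, tosses=10)
print(f"{p:.1%}")  # 62.4%
```

The same function shows how quickly a rare event for one person becomes a likely event for a group: the probability for a single participant stays at 1 in 1,024 however many people take part.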

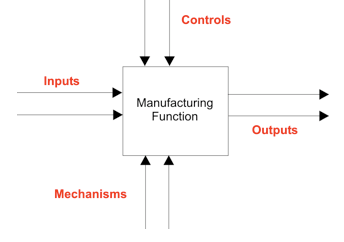

The prosecutor was arguing that if Horizon worked “as a whole”, despite bugs, then evidence from the system was sufficiently reliable to convict, even without corroboration. Crucially the prosecutor confused four different purposes of Horizon:

A- a source for the corporate accounts,

B- the Post Office managing branches,

C- subpostmasters managing their own branches,

D- a source of criminal evidence.

Bugs could render Horizon hopelessly unfit for purposes C and D, even B, while it remained adequate for A. External auditors were concerned only with Horizon’s reliability for A. Maybe they suspected correctly that it was inadequate for purposes B, C, and D, but errors at branch level were no more significant than rounding errors; they were “immaterial” to external auditors.

The flawed industry model for external audit means that these auditors have an incentive not to see errors they do not have a direct responsibility to detect and analyse. Such errors were unquestionably within the remit of Post Office Internal Audit, but they slept through the whole scandal. [I wrote about the abysmal failure of Post Office Internal Audit at some length last year in the Digital Evidence and Electronic Signature Law Review.]

An interesting response to my tweet

Twitter threads are often over-simplified and lack nuance. Peter Crowley disagreed with my observations about Horizon’s role in feeding the corporate accounts.

In explaining why I was wrong Peter Crowley offered an argument with which I largely agree, but which does not refute my point.

“No, it’s not adequate for A. A large volume of microfails is a macrofail, full stop. Just because some SPMs are wrongly overpaid, some wrongly underpaid, and the differences net off does NOT make the system adequate, unless you assess the failing and reserve for compensation.”

In the case of Horizon a large number of minor errors may well have amounted to a “macrofail”, a failure of the system to comply with its business purpose of providing data to the corporate accounts. I don’t know. I strongly suspect that Peter Crowley is correct, but I have not seen the matter addressed explicitly so I cannot be certain.

This is not a question to which I have given much attention up till now, for the reasons I gave above, and in my tweets. Even if Horizon was adequate for the Post Office’s published financial accounts that tells us nothing about its suitability for its other vital purposes – the purposes that were being assessed in court.

The prosecution barrister was almost certainly being naive, as his other comments in the trial suggest, rather than attempting to mislead the jury. It is absurd to argue that if a system is fit for one particular purpose it can safely be considered reliable for all purposes. One has to be lamentably ignorant about complex financial systems to utter such nonsense. Sadly such naive ignorance is common.

Nevertheless, Peter Crowley did home in on an important point with significant implications. I did not want to address these in my tweet thread and they deserve a far more considered response than I can provide on Twitter.

What was the significance of low level errors in Horizon?

Horizon might, maybe, have been okay for producing the corporate accounts if the errors at individual branches did not distort the financial reports issued to investors and the public. That is possible. However, what would be the basis for such confidence if we don’t understand the full impact of the low level errors?

There was no system audit. Post Office Internal Audit was invisible. The only reason to believe in Horizon was the stream of assertions without supporting evidence from the Post Office and Fujitsu – from executives who quite clearly did not want to see problems.

External auditors’ role is to vouch for the overall truth and fairness of the accounts, not guarantee that they are 100% accurate and free of error. They calculate the materiality figure for the audit. This is the threshold beyond which any errors “could reasonably be expected to influence the economic decisions of users taken on the basis of the financial statements” (International Standard on Auditing, UK 320 [PDF, opens in new tab], “Materiality in Planning and Performing an Audit”).

If the materiality figure is £50,000 then any errors at that level and above are material. Any that are below are not material. The threshold is a mixture of the quantitative and qualitative. The auditors have to assess the numerical amount and the possible impact in different contexts.

External auditors cannot check every transaction to satisfy themselves that the accounts give a true and fair picture. They sample their way through the accounts using a chosen sample size, and a sampling interval that will give them the number of items they want. The higher the interval the fewer transactions are inspected.

The size of the sample and the sampling interval depend on the auditors’ assessment of the materiality threshold for the corporation, the risks assessed by the auditors, and the quality of control that is being exercised by management. The greater the confidence external auditors have in management of risks and the control regime the more relaxed they can be about the possibility that their sampling might miss errors. They can then justify a smaller sample that they will check. The less confidence they have the greater the sample size must be, and the more work they have to do.

Once the auditors have assessed risk management and internal controls they perform a simple arithmetical calculation that can be crudely described as the materiality threshold divided by the confidence score in the management regime. This gives the sampling interval, assuming they are sampling based on monetary units rather than physical items or accounts. For example if the interval is £50,000 then they will guarantee to hit and examine every transaction at that level and above. A transaction of £5,000 would have a 10% chance of being selected.
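The selection arithmetic can be sketched in a few lines of Python. This is a rough illustration under stated assumptions, not a description of any firm’s methodology: the function names, the materiality of £150,000 and the confidence factor of 3.0 are all hypothetical, chosen only to reproduce the £50,000 interval used above. I have also assumed that the factor rises as auditors need more assurance from their own sampling.

```python
def sampling_interval(materiality: float, confidence_factor: float) -> float:
    """Crude interval: materiality divided by a confidence factor.

    Assumption: the factor rises as the auditors need more assurance from
    their own sampling, i.e. as their trust in management controls falls.
    """
    return materiality / confidence_factor


def selection_probability(amount: float, interval: float) -> float:
    """Chance that a transaction is hit when sampling by monetary unit.

    Anything at or above the interval is certain to be selected.
    """
    return min(1.0, amount / interval)


# Hypothetical figures, chosen only to reproduce the £50,000 interval above.
interval = sampling_interval(materiality=150_000, confidence_factor=3.0)

for amount in (5_000, 25_000, 50_000, 120_000):
    chance = selection_probability(amount, interval)
    print(f"£{amount:,}: {chance:.0%} chance of selection")
```

Halving the hypothetical factor to 1.5 doubles the interval to £100,000, so the same £5,000 transaction would then have only a 5% chance of being examined. That is the mechanism by which a generous view of management controls reduces the amount of testing.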

In addition to the overall materiality threshold they have to calculate a further threshold for particular types of transactions, or types of account. This figure, the performance materiality, reflects the possibility of smaller errors in specific contexts accumulating to an error that would exceed the corporate materiality threshold.
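A small sketch shows why accumulation matters. The figures below are entirely invented for illustration; they are not Post Office data. Every individual error is far below the overall threshold, and the overstatements and understatements nearly cancel out, yet the gross amount of money misallocated between branches is well above it.

```python
materiality = 50_000  # hypothetical overall threshold, in pounds

# Hypothetical signed branch errors: positive = overstated, negative = understated.
branch_errors = [4_000, -3_500, 6_200, -5_800, 2_900, -3_100] * 5

largest = max(abs(e) for e in branch_errors)   # biggest single error
net = sum(branch_errors)                       # what survives after netting off
gross = sum(abs(e) for e in branch_errors)     # total money wrongly allocated

print(f"Largest single error: £{largest:,}")
print(f"Net error:            £{net:,}")
print(f"Gross error:          £{gross:,}")
print(f"Gross exceeds materiality: {gross > materiality}")
```

An auditor who looks only at the net figure, or at each error in isolation, would see nothing of concern in these numbers. A lower threshold applied to this class of account is one way of catching the pattern.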

This takes us straight back to Peter Crowley’s point about Horizon branch errors undermining confidence that the overall system could be adequate for the corporate financial accounts: “A large volume of microfails is a macrofail, full stop”. In this case that is probably true, although I don’t think the full stop is appropriate. We don’t know, and the external auditors must take the blame for that.

Question time for EY?

There are several awkward questions the external auditors should answer at the Williams Inquiry. There are many valid explanations for why we might not know what is happening in a complex system, but a failure to ask pertinent questions is not a good reason.

Did EY ask the right questions?

So did EY themselves ask challenging, relevant questions and perform appropriate substantive tests to establish whether Horizon’s bugs, which might have been individually immaterial, combined to compromise the integrity of the data provided to the corporate accounts?

What about “performance materiality”?

Did EY assign a lower level of performance materiality to the branch accounts because of the Horizon branch errors? If not, why not? If so, what was that threshold? What was the basis for the calculation? What reason did EY have to believe that the accumulation of immaterial branch errors did not add up to material error at the corporate level?

The forensic accounting firm Second Sight which was commissioned to investigate Horizon reported in 2015 that:

“22.11 … for most of the past five years, substantial credits have been made to Post Office’s Profit and Loss Account as a result of unreconciled balances held by Post Office in its Suspense Account.

22.12. It is, in our view, probable that some of those entries should have been re-credited to branches to offset losses previously charged.”

Were EY aware of the lack of control indicated by a failure to investigate and reconcile suspense account entries? Did they consider whether this had implications for their performance materiality assessment? Poor management of suspense accounts is a classic warning sign of possible misstatement in the accounts and also of fraud.

What about the superusers?

Did the lack of control that EY reported [PDF, opens in new tab] in 2011 over superusers, which had still not been fully addressed by 2015, influence their assessment of the potential for financial misstatement arising from Horizon, and the calculation of performance materiality? If not, why not? These superusers were user accounts with powerful privileges that allowed them to amend production data.

What about the risk of unauthorised and untested changes to Horizon?

EY also reported in 2011 that the Post Office and Fujitsu did not apply normal, basic management controls over the testing and release of system changes.

“We noted that POL (Post Office Limited) are not usually involved in testing fixes or maintenance changes to the in-scope applications; we were unable to identify an internal control with the third party service provider (Fujitsu) to authorize fixes and maintenance changes prior to development for the in-scope applications. There is an increased risk that unauthorised and inappropriate changes are deployed if they are not adequately authorised, tested and approved prior to migration to the production environment.”

How could EY place trust in a system that was being managed in such an amateurish, shambolic manner?

The lack of control over superusers and dreadful management of changes to Horizon were reported in EY’s 2011 management letter setting out “internal control matters” to the Post Office board and senior management. Eleanor Shaikh made a Freedom of Information request for copies of EY’s management letter from preceding years. These would have been extremely interesting, but the Post Office refused to divulge them on the unconvincing grounds that it was a “vexatious request”. “Embarrassing request” might have been more accurate.

Reserving for compensation and the Post Office as a “going concern”

Given the crucial role of Horizon in providing the only evidence for large numbers of prosecutions, and the widespread public concern about the reliability of Horizon and the accuracy of the evidence, did EY consider whether the Royal Mail or Post Office should provide reserves for compensation (as suggested by Peter Crowley)?

Did EY assess whether the possibility of compensation might be a “going concern” issue? The evidence suggests that the external auditors either did not consider this issue at all, or chose to assume, as their successors PwC explicitly did, that even if compensation were to leave the corporation insolvent it could always draw on unlimited government support and therefore would always “be able to meet its liabilities as they fall due” (the usual phrasing to acknowledge that a company is a going concern). As the sole shareholder, for whose benefit external audit was produced, the government had a duty to pay attention to that risk.

If EY had no concerns about the possibility of compensation we are entitled to infer they had full confidence in Horizon. That only raises the question of the basis for that confidence.

The Ismay Report and the lack of any system audit

So what was the basis for EY’s confidence in Horizon? We know from the Post Office’s internal Ismay Report [PDF, opens in new tab] in 2010 that EY had not performed a system audit. This report, entitled “Response to Challenges Regarding Systems Integrity”, makes no mention of any system audit, whether performed by internal or external audit.

“Ernst & Young and Deloittes are both aware of the issue from the media and we have discussed the pros and cons of reports with them. Both would propose significant caveats and would have limits on their ability to stand in court, therefore we have not pursued this further.”

What were the caveats that EY insisted on if they were to conduct a system audit, and what were the limits they put on providing evidence in court?

That quote from the Ismay Report reveals that EY were familiar with the public concern about Horizon and discussed this with the Post Office. The whole report also shows that EY must have known there had been no credible independent system audit. A further quote from Ismay is interesting.

“The external audit that EY perform does include tests of POL’s IT and finance control environment but the audit scope and materiality mean that EY would not give a specific opinion on the systems from this.”

This makes it clear that in 2010 EY were not in any position to offer an opinion on Horizon. The Post Office therefore knew that EY’s audits did not offer an opinion at system level, and EY knew that there had been no appropriate audit at that level. The question that must be asked repeatedly is – what was the basis for everyone’s confidence in Horizon, other than wishful thinking?

An embarrassing public intervention from the accountancy profession and the trade press

Ten months before the Ismay Report was issued, in October 2009, Accountancy Age reported on the mounting concerns that Horizon had flaws which misstated branch accounts. The article quoted a senior representative of the Institute of Chartered Accountants in England and Wales, and it stated that the newspaper asked the Post Office whether it would perform an IT audit of Horizon. Richard Anning, head of the ICAEW IT faculty, said:

“You need to make sure that your accounting system is bullet proof.

Whether they have an IT audit or not, they need to understand what was happening.”

Accountancy Age reported that the Post Office declined to comment when asked if it would undertake a system audit. The magazine also attached an editorial comment to the story:

“The Post Office should consider an IT audit to show it has taken the matter seriously. Although it may be small sums of money involved, perception is everything and it could not consider going back into bank services with an accounting system that had doubts attached to it.”

Such a call from the ICAEW, and in the trade press, must have caused serious, high level discussion within the Post Office, the wider Royal Mail, and within EY. Yet 10 months later, after these public, and authoritative, calls for the Post Office to remove doubts about Horizon, the corporation issued a report saying that after discussions with EY there would be no system audit. The reasoning was that commissioning professional scrutiny of Horizon would suggest that the Post Office shared the widespread doubts.

“It is also important to be crystal clear about any review if one were commissioned – any investigation would need to be disclosed in court. Although we would be doing the review to comfort others, any perception that POL doubts its own systems would mean that all criminal prosecutions would have to be stayed. It would also beg a question for the Court of Appeal over past prosecutions and imprisonments.”

Did EY press for an appropriate system audit following the 2009 call from the ICAEW and Accountancy Age? If not, why not? What was EY’s response to that lack of action?

How did EY plan and conduct subsequent external audits in the knowledge that Horizon had not been audited rigorously, that the Post Office was determined not to allow any system audit, and that the ICAEW had expressed concern? What difference did this knowledge make? If EY carried on as before how can they justify that?

What did EY think about Post Office internal control?

How confident was EY in the Royal Mail’s and Post Office’s internal control regime, and specifically Internal Audit, in the light of the feeble response to the superuser problem, the pitifully inadequate change management practices, and the lack of any credible system audit?

After working in a highly competent financial services internal audit department I know how humiliating it would be for internal auditors if their external counterparts highlighted basic control failings and then reported four years later that problems had not been resolved. This would be an obvious sign that the internal auditors were ineffective. The fact that Fujitsu managed the IT service does not let anyone at the Post Office off the hook. The systems belonged to the Post Office, who managed the contract, monitored the service, and carried ultimate responsibility.

The level of confidence that external auditors have in internal control is a crucial factor in planning audits. This issue, and the question of whether EY applied strict performance materiality thresholds to Horizon’s branch accounts, contribute to the wider concern about the effectiveness of the external audit business model.

External audit – a broken business model

The assessments of materiality, and of performance materiality have a direct impact on the profitability of an audit. The more confidence that the external auditors have in risk management and internal control the less substantive testing they have to do.

If external auditors pretend that there is a tight and effective regime of risk management and internal control, and that financial systems possess processing integrity, then they can justify setting higher thresholds for materiality and performance materiality and correspondingly higher sampling intervals. Put crudely, the external auditors’ rating of internal control has a very strong influence on the amount of work the audit team must perform; better ratings mean less work and more profit.

If auditors judge that the corporation under audit is a badly managed mess, and they are working to a fixed audit fee then they have a problem. They can perform a rigorous audit and make a loss, or they can pretend that everything is tight and reliable, and make a profit. I saw the dilemma playing out in practice with the control rating being manipulated to fit the audit fee. It left me disillusioned with the external audit industry (as I wrote here).

The dilemma presents a massive conflict of interest to the auditors. It undermines their independence, and compromises the business model for external audit.

The auditors have a financial incentive to ignore problems. Executive management and the board of directors of the company under audit have a story they want to tell investors, suppliers, and external stakeholders. The commercial pressure on the auditors is to reassure the world that this story is true and fair. If they cause trouble they will lose the audit contract to a less scrupulous rival.

This conflict of interest is exacerbated by the revolving door of senior staff moving between the Big 4 and clients. Rod Ismay, who wrote the notorious report bearing his name, joined the Post Office direct from Ernst & Young where he had been a senior audit manager. It is a cosy and lucrative arrangement, but it hardly inspires confidence that external auditors will challenge irresponsible clients.

Conclusion – to be provided by the Williams Inquiry?

I am glad that Peter Crowley prompted me into setting out my thoughts about external auditors’ possible, or likely, failings over Horizon. I stand by my statement that Horizon could have been perfectly adequate as a source for the external accounts and yet completely inadequate for the purposes of the subpostmasters. However, it is worth taking a close look at whether Horizon really was adequate for the high level accounts. That turns attention to the conduct of some extremely richly paid professionals who assured the world over many years that the Post Office’s accounts based on Horizon were sound. If they had shown any inclination to ask unwelcome questions this dreadful scandal might have been brought to a conclusion years earlier.

EY will have to come up with some compelling and persuasive answers to the Williams Inquiry to remove the suspicion that they chose not to ask the right questions, that they chose not to challenge the Royal Mail and Post Office. Everything I have learned about external audit and its relations with internal audit makes me suspicious about EY’s conduct. I will be very surprised if evidence appears that will make me change my mind.

I hope that the Williams Inquiry will prompt some serious debate in the media and amongst politicians about whether the current external audit model works. I doubt if any other member of audit’s Big 4 would have performed any better than EY, and that is a scandal in its own right.

If one of the world’s leading audit firms failed to spot that the Post Office was being steered into insolvency over three decades then we are entitled to ask – what is the point of external auditors apart from providing rich rewards to the partners who own them?

This article appeared in the June 2009 edition of Testing Experience magazine and the October 2009 edition of Security Acts magazine.
